Click Here for INL News Amazon Best Seller Books

-

Handy Easy Email and World News Links WebMail

GoogleSearch INLTV.co.uk YahooMail HotMail GMail - news.sky.com/watch-live New York Post nypost.com YouTube

ArtificialIntelligence(AI)AndHumanity

Artificial Intelligence (AI) And Humanity

Click Here for INL News Amazon Best Seller Books

Click Here for INL News Amazon Best Seller Books

Click Here for INL News Amazon Best Seller Books

Click Here for the best range of Amazon Computers

Click Here for INL News Amazon Best Seller Books

Amazon Electronics - Portable Projectors

Dr. Steven Greer Was Offered $2 Billion Dollars To Keep This A Secret UFO UAP

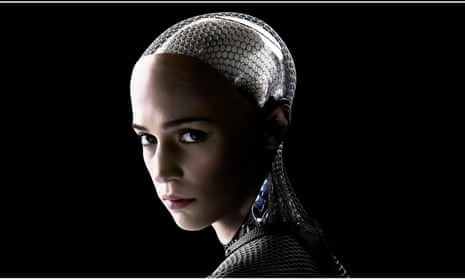

An AI humanoid from the 2014 film Ex Machina. The technology has long featured in Hollywood films but is increasingly becoming part of real life.

Meet Ameca! The World’s Most Advanced Robot _ This Morning

wn.bz-Illuminati-History

CIA Whistleblower_CIAHitman

CIA Illegal Activities Exposed

CIA Hitman Man in Black Joseph Spencer CIA Whistleblower Speaks Out

This video is of a CIA Whistleblower, Joseph Spencer ,

who was a Man In Black operating as a Hitman for the CIA

Bill Gates was a senior C.I.A Officer

Was Michael C. Rupert Murdered?

https://isgp-studies.com/cia-heroin-and-cocaine-drug-trafficking#michael-ruppert-cia-drug-trafficking

This question remains. There is every reason that many powerful groups and people including the CIA would have been keen to see the end of Michael Rupert because he was constantly exposing the CIA's involvement in the distribution of illegal drugs, which Michael Rupert sated was a multi trillion dollar industry. Michael Rupert claimed that if all the illegal drug proceeds where withdrawn from the major banks around the world, this would cause a major depression in the world economy.

Michael Rupert also outed Bill Gates as a senior C.I.A Officer

http://youtubeexposed.com/index.php/cia-illegal-activities-exposed

Existential risk from artificial general intelligence - Wikipedia

https://en.wikipedia.org/wiki/

Existential risk from artificial general intelligence is the hypothesis that substantial progress in artificial general intelligence (AGI) could result in human extinction or some other unrecoverable global catastrophe.[1][2][3]

The existential risk ("x-risk") school argues as follows: The human species currently dominates other species because the human brain has some distinctive capabilities that other animals lack. If AI surpasses humanity in general intelligence and becomes "superintelligent", then it could become difficult or impossible for humans to control. Just as the fate of the mountain gorilla depends on human goodwill, so might the fate of humanity depend on the actions of a future machine superintelligence.

COVID Conspiracy Doc Dies; Doc Group Behind Anti-Trans Laws; Aiding Anti-Vax Group

— This past week in healthcare investigations

by Michael DePeau-Wilson, Enterprise & Investigative Writer, MedPage TodayMay 24, 2023

https://www.medpagetoday.com/

Welcome to the latest edition of Investigative Roundup, highlighting some of the best investigative reporting on healthcare each week.

COVID Conspiracy Doc Dies

Rashid Buttar, DO, a well-documented COVID conspiracy theorist, died days after claiming he had been poisoned, according to the Daily Staropens in a new tab or window.

Buttar claimed in early May that he was given a "poison" that contained "200 times of what was in the vaccine" shortly after an interview with CNNopens in a new tab or window in late 2021, according to the report.

His official cause of death wasn't released, but he spent time in intensive care recently, the Star reported.

Conspiracy theories have cropped up in the wake of his death, according to VICE Newsopens in a new tab or window. Anti-vaxers have claimed that doctors who oppose mainstream medicine are being killed by mysterious forces, and Buttar said he had a stroke in February, which he "appeared to blame on vaccine 'shedding,'" VICE reported.

A member of the "disinformation dozen," Buttar was known for spreading disinformation about the COVID-19 pandemic. Before the pandemic, Buttar was punished by the North Carolina state medical board for his treatment of autism and cancer patients -- including injecting a cancer patient with hydrogen peroxide, according to the Star.

Buttar was born in England and spent most of his life in the U.S. He was 57 when he died.

The Doctor Group Behind Anti-Trans Laws

The movement to pass laws banning gender-affirming care for transgender youth is being driven by a small group of far-right interest groups, according to two reports from the Associated Pressopens in a new tab or window.

One of those groups, a nonprofit called Do No Harmopens in a new tab or window, launched last year to oppose diversity initiatives in medicine, but quickly became a significant leader in efforts to introduce and pass legislation banning healthcare access for transgender youth, according to the reports.

The nonprofit has even drafted model legislation that was used in three states: Montana, Arkansas, and Iowa. One bill signed into law in Montana contained nearly all of the language used in the nonprofit's model, AP reported.

Nephrologist Stanley Goldfarb, MD, is the founder of Do No Harm. He was an associate dean at the University of Pennsylvania's medical school until he retired in 2021.

Medical News from Around the Web

Goldfarb told AP in an email that his nonprofit "works to protect children from extreme gender ideology through original research, coalition-building, testimonials from parents and patients who've lived through deeply troubling experiences, and advocacy for the rigorous, apolitical study of gender dysphoria."

Do No Harm is continuing in its efforts to spread its model legislation to more states. The group had lobbyists registered in Kansas, Missouri, and Tennessee in 2022 and in Florida in 2023.

Wall Street Exec Aids RFK Jr.'s Anti-Vax Group

A veteran Wall Street executive has helped fund an anti-vaccine group founded by 2024 Democratic presidential hopeful Robert F. Kennedy Jr., according to CNBCopens in a new tab or window.

Mark Gorton, the founder and chairperson of Tower Research Capital, said he has given $1 million to Kennedy's group -- the Children's Health Defense -- since 2021. Kennedy, who has been a long-time critic of vaccines for children, was also the chairman of the group before stepping down to run in the 2024 Democratic presidential primaries, which he announced in April.

Children's Health Defense pushed back against COVID-19 vaccines, which helped to boost Kennedy's profile nationally, according to the report. The group more than doubled its fundraising totals from $6 million in 2020 to $15 million in 2021, according to tax documents reviewed by CNBC. Those documents did not reveal names of any specific donors.

Groton said he has met with Kennedy multiple times since donating to the anti-vaccine group.

"I like him a lot. He's a super smart guy. Again, he's not really a politician. He's a corruption fighter," Groton told CNBC.

He also claimed to be working with the Children's Health Defense group staff to advise them on messaging strategies.

Neither the Children's Health Defense group nor Kennedy's campaign would confirm Groton's donation or his involvement with Kennedy.

-

![author['full_name']](https://ci4.googleusercontent.com/proxy/_HPUqG1QIKD5dAUbXeMnzOcqQEOPf-ihfc2qfIhIRj0GMPhaBOP2RoioF3Hb5eIsrrdyzDVcHadPLle0A67sfy_1pz6A3vnVCov7FmnnfARVlxmy1lGQFPsWOg=s0-d-e1-ft#https://clf1.medpagetoday.com/media/images/author/MDePeau-Wilson_188.jpg)

Michael DePeau-Wilson is a reporter on MedPage Today’s enterprise & investigative team. He covers psychiatry, long covid, and infectious diseases, among other relevant U.S. clinical news. Follow

Dr. Rashid Buttar Dies Days After He Said He's Been Poisoned

Alien Disclosure Dr. Steven Greer - Part 3 20th Anniversary NPC

Aliens Have Already Arrived - Dr. Garry Nolan

SALT Connections New York

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12 2023

1 of 6

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12 2023

2 of 6

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12_2023

3 of 6

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12 2023

4 of 6

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12 2023

5 of 6

Dr Greer's UFO Disclosure Groundbreaking National Press Club Event June12 2023

6 of 6

National Press Club Event Disclosure Project Steven Greer An Opinion

Fight For Disclosure Disinformation And Lies

The Survival of Human it Depends On This UAP & UFO Non-Human Retrieval Program

Dr. Steven Greer

“Godfather of AI” Geoffrey Hinton Warns of the “Existential Threat” of AI _ Aman

Could Chat GPT and AI Threaten Human Life

Dr. Steven Greer Was Offered $2 Billion Dollars To Keep This A Secret UFO UAP

DrStephenGreerClassifiedAlienEncountersRevealedBTraumatologist

DrStevenGreer- TalksUFOsPRETTYINTENSE PODCASTEP82

DrStevenGreerDidWeLandOnTheMoon Clip02Ep185

DrStevenGreer-TheBrutalTruthAboutOurGovernment-Clips01Ep185

DrStevenGreerWithDemiLovato

HesNotBeingHonest-WhatsElonMusksConnectionToJeffre Epstein

Find Out About The Astonishing Classified Technologies At The South Pole

Starseeds Conversation With Sophi

Disclosures Social Changes Messag

Pleiades Not Too Young To Support Or

HowToCommunicateWithExtraterrestrials-ETs_TheyDONOTWantYouT KNOW

SIRIUSFromDrSteven Greer-OriginalFullLengthDocumentaryFilm

videos/TheTRUTHAboutJeffre Epsteinw_WhitneyWebb-PBDPodcast_Ep198.mp4

UFOExpertDrGreerRevealsFirstEverPhotoOfAnAlien- ImpulsiveEP107

Elon Musk on Sam Altman and ChatGPT_ I am the reason OpenAI exists

Dr. Steven Greer Mystery Behind UFO- UAPs Alien Phenomenon

And The Secret Government

AI and the future of humanity _ Yuval Noah Harari at the Frontiers Forum

Should We Be Concerned ' Josh Hawley Asks Open AI Head About AI's Effect On Elections

videos/Man & God _ Prof. John Lennox

Edward Snowden and Ben Goertzel on the AI Explosion and Data Privacy

EMERGENCY EPISODE_ Ex-Google Officer Finally Speaks Out On The Dangers Of AI!

Evolution of Boston Dynamic’s Robots [1992-2023]

Open AI CEO, CTO on risks and how AI will reshape society

Is a ban on AI technology good or harmful _ 60 Minutes Australia

videos/India’s role in the AI revolution _ Rahul Gandhi _ Silicon Valley, USA(1)

Open AI CEO_The benefits of the tools outweigh the risks

People DON'T Realize What's Coming! URGENT Wake-Up Call You NEED to Hear _ Charl

MichioKaku_FutureOfHumans-Aliens-SpaceTravel-Physics_LexFridmanPodc.mp4MichioKaku_FutureOfHumans-Aliens-SpaceTravel-Physics_LexFridmanPodc

MichioKakuBreaks Silence_TheUniverseIsn'tLocallyRealAndNothingExists

vJesseVentura_63DocumentsTheGovernmentDoesn'tWantYouToRead

AI poses existential threat and risk to health of millions, experts warn

BMJ Global Health article calls for halt to ‘development of self-improving artificial general intelligence’ until regulation in place

AI could harm the health of millions and pose an existential threat to humanity, doctors and public health experts have said as they called for a halt to the development of artificial general intelligence until it is regulated.

Artificial intelligence has the potential to revolutionise healthcare by improving diagnosis of diseases, finding better ways to treat patients and extending care to more people.

But the development of artificial intelligence also has the potential to produce negative health impacts, according to health professionals from the UK, US, Australia, Costa Rica and Malaysia writing in the journal BMJ Global Health.

The risks associated with medicine and healthcare “include the potential for AI errors to cause patient harm, issues with data privacy and security and the use of AI in ways that will worsen social and health inequalities”, they said.

One example of harm, they said, was the use of an AI-driven pulse oximeter that overestimated blood oxygen levels in patients with darker skin, resulting in the undertreatment of their hypoxia.

But they also warned of broader, global threats from AI to human health and even human existence.

AI could harm the health of millions via the social determinants of health through the control and manipulation of people, the use of lethal autonomous weapons and the mental health effects of mass unemployment should AI-based systems displace large numbers of workers.

“When combined with the rapidly improving ability to distort or misrepresent reality with deep fakes, AI-driven information systems may further undermine democracy by causing a general breakdown in trust or by driving social division and conflict, with ensuing public health impacts,” they contend.

Threats also arise from the loss of jobs that will accompany the widespread deployment of AI technology, with estimates ranging from tens to hundreds of millions over the coming decade.

“While there would be many benefits from ending work that is repetitive, dangerous and unpleasant, we already know that unemployment is strongly associated with adverse health outcomes and behaviour,” the group said.

“Furthermore, we do not know how society will respond psychologically and emotionally to a world where work is unavailable or unnecessary, nor are we thinking much about the policies and strategies that would be needed to break the association between unemployment and ill health,” they said.

But the threat posed by self-improving artificial general intelligence, which, theoretically, could learn and perform the full range of human tasks, is all encompassing, they suggested.

“We are now seeking to create machines that are vastly more intelligent and powerful than ourselves. The potential for such machines to apply this intelligence and power, whether deliberately or not and in ways that could harm or subjugate humans, is real and has to be considered.

“With exponential growth in AI research and development, the window of opportunity to avoid serious and potentially existential harms is closing.

“Effective regulation of the development and use of artificial intelligence is needed to avoid harm,” they warned. “Until such regulation is in place, a moratorium on the development of self-improving artificial general intelligence should be instituted.”

Separately, in the UK, a coalition of health experts, independent factcheckers, and medical charities called for the government’s forthcoming online safety bill to be amended to take action against health misinformation.

“One key way that we can protect the future of our healthcare system is to ensure that internet companies have clear policies on how they identify the harmful health misinformation that appears on their platforms, as well as consistent approaches in dealing with it,” the group wrote in an open letter to Chloe Smith, the secretary of state for science, innovation and technology.

“This will give users increased protections from harm, and improve the information environment and trust in the public institutions.

Signed by institutions including the British Heart Foundation, Royal College of GPs, and Full Fact, the letter calls on the UK government to add a new legally binding duty to the bill, which would require the largest social networks to add new rules to their terms of service governing how they moderate health-based misinformation.

Will Moy, the chief executive of Full Fact, said: “Without this amendment, the online safety bill will be useless in the face of harmful health misinformation.”

A race it might be impossible to stop’: how worried should we be about AI?s are warning machine learning will soon outsmart humans – maybe it’s time for us to take note

Last Monday an eminent, elderly British scientist lobbed a grenade into the febrile anthill of researchers and corporations currently obsessed with artificial intelligence or AI (aka, for the most part, a technology called machine learning). The scientist was Geoffrey Hinton, and the bombshell was the news that he was leaving Google, where he had been doing great work on machine learning for the last 10 years, because he wanted to be free to express his fears about where the technology he had played a seminal role in founding was heading.

To say that this was big news would be an epic understatement. The tech industry is a huge, excitable beast that is occasionally prone to outbreaks of “irrational exuberance”, ie madness. One recent bout of it involved cryptocurrencies and a vision of the future of the internet called “Web3”, which an astute young blogger and critic, Molly White, memorably describes as “an enormous grift that’s pouring lighter fluid on our already smoldering planet”.

We are currently in the grip of another outbreak of exuberance triggered by “Generative AI” – chatbots, large language models (LLMs) and other exotic artefacts enabled by massive deployment of machine learning – which the industry now regards as the future for which it is busily tooling up.

Recently, more than 27,000 people – including many who are knowledgeable about the technology – became so alarmed about the Gadarene rush under way towards a machine-driven dystopia that they issued an open letter calling for a six-month pause in the development of the technology. “Advanced AI could represent a profound change in the history of life on Earth,” it said, “and should be planned for and managed with commensurate care and resources.”

It was a sweet letter, reminiscent of my morning sermon to our cats that they should be kind to small mammals and garden birds. The tech giants, which have a long history of being indifferent to the needs of society, have sniffed a new opportunity for world domination and are not going to let a group of nervous intellectuals stand in their way.

Which is why Hinton’s intervention was so significant. For he is the guy whose research unlocked the technology that is now loose in the world, for good or ill. And that’s a pretty compelling reason to sit up and pay attention.

He is a truly remarkable figure. If there is such a thing as an intellectual pedigree, then Hinton is a thoroughbred.

His father, an entomologist, was a fellow of the Royal Society. His great-great-grandfather was George Boole, the 19th-century mathematician who invented the logic that underpins all digital computing.

His great-grandfather was Charles Howard Hinton, the mathematician and writer whose idea of a “fourth dimension” became a staple of science fiction and wound up in the Marvel superhero movies of the 2010s. And his cousin, the nuclear physicist Joan Hinton, was one of the few women to work on the wartime Manhattan Project in Los Alamos, which produced the first atomic bomb.

Hinton has been obsessed with artificial intelligence for all his adult life, and particularly in the problem of how to build machines that can learn. An early approach to this was to create a “Perceptron” – a machine that was modelled on the human brain and based on a simplified model of a biological neuron. In 1958 a Cornell professor, Frank Rosenblatt, actually built such a thing, and for a time neural networks were a hot topic in the field.

But in 1969 a devastating critique by two MIT scholars, Marvin Minsky and Seymour Papert, was published … and suddenly neural networks became yesterday’s story.

Except that one dogged researcher – Hinton – was convinced that they held the key to machine learning. As New York Times technology reporter Cade Metz puts it, “Hinton remained one of the few who believed it would one day fulfil its promise, delivering machines that could not only recognise objects but identify spoken words, understand natural language, carry on a conversation, and maybe even solve problems humans couldn’t solve on their own”.

In 1986, he and two of his colleagues at the University of Toronto published a landmark paper showing that they had cracked the problem of enabling a neural network to become a constantly improving learner using a mathematical technique called “back propagation”. And, in a canny move, Hinton christened this approach “deep learning”, a catchy phrase that journalists could latch on to. (They responded by describing him as “the godfather of AI”, which is crass even by tabloid standards.)

In 2012, Google paid $44m for the fledgling company he had set up with his colleagues, and Hinton went to work for the technology giant, in the process leading and inspiring a group of researchers doing much of the subsequent path-breaking work that the company has done on machine learning in its internal Google Brain group.

During his time at Google, Hinton was fairly non-committal (at least in public) about the danger that the technology could lead us into a dystopian future. “Until very recently,” he said, “I thought this existential crisis was a long way off. So, I don’t really have any regrets over what I did.”

Last Monday an eminent, elderly British scientist lobbed a grenade into the febrile anthill of researchers and corporations currently obsessed with artificial intelligence or AI (aka, for the most part, a technology called machine learning). The scientist was Geoffrey Hinton, and the bombshell was the news that he was leaving Google, where he had been doing great work on machine learning for the last 10 years, because he wanted to be free to express his fears about where the technology he had played a seminal role in founding was heading.

To say that this was big news would be an epic understatement. The tech industry is a huge, excitable beast that is occasionally prone to outbreaks of “irrational exuberance”, ie madness. One recent bout of it involved cryptocurrencies and a vision of the future of the internet called “Web3”, which an astute young blogger and critic, Molly White, memorably describes as “an enormous grift that’s pouring lighter fluid on our already smoldering planet”.

We are currently in the grip of another outbreak of exuberance triggered by “Generative AI” – chatbots, large language models (LLMs) and other exotic artefacts enabled by massive deployment of machine learning – which the industry now regards as the future for which it is busily tooling up.

Recently, more than 27,000 people – including many who are knowledgeable about the technology – became so alarmed about the Gadarene rush under way towards a machine-driven dystopia that they issued an open letter calling for a six-month pause in the development of the technology. “Advanced AI could represent a profound change in the history of life on Earth,” it said, “and should be planned for and managed with commensurate care and resources.”

It was a sweet letter, reminiscent of my morning sermon to our cats that they should be kind to small mammals and garden birds. The tech giants, which have a long history of being indifferent to the needs of society, have sniffed a new opportunity for world domination and are not going to let a group of nervous intellectuals stand in their way.

Which is why Hinton’s intervention was so significant. For he is the guy whose research unlocked the technology that is now loose in the world, for good or ill. And that’s a pretty compelling reason to sit up and pay attention.

He is a truly remarkable figure. If there is such a thing as an intellectual pedigree, then Hinton is a thoroughbred.

His father, an entomologist, was a fellow of the Royal Society. His great-great-grandfather was George Boole, the 19th-century mathematician who invented the logic that underpins all digital computing.

His great-grandfather was Charles Howard Hinton, the mathematician and writer whose idea of a “fourth dimension” became a staple of science fiction and wound up in the Marvel superhero movies of the 2010s. And his cousin, the nuclear physicist Joan Hinton, was one of the few women to work on the wartime Manhattan Project in Los Alamos, which produced the first atomic bomb.

Artificial intelligence pioneer Geoffrey Hinton has quit Google, partly in order to air his concerns about the technology.

But now that he has become a free man again, as it were, he’s clearly more worried. In an interview last week, he started to spell out why. At the core of his concern was the fact that the new machines were much better – and faster – learners than humans. “Back propagation may be a much better learning algorithm than what we’ve got. That’s scary … We have digital computers that can learn more things more quickly and they can instantly teach it to each other. It’s like if people in the room could instantly transfer into my head what they have in theirs.”

What’s even more interesting, though, is the hint that what’s really worrying him is the fact that this powerful technology is entirely in the hands of a few huge corporations.

Until last year, Hinton told Metz, the Times journalist who has profiled him, “Google acted as a proper steward for the technology, careful not to release something that might cause harm.

“But now that Microsoft has augmented its Bing search engine with a chatbot – challenging Google’s core business – Google is racing to deploy the same kind of technology. The tech giants are locked in a competition that might be impossible to stop.”

He’s right. We’re moving into uncharted territory.

Well, not entirely uncharted. As I read of Hinton’s move on Monday, what came instantly to mind was a story Richard Rhodes tells in his monumental history The Making of the Atomic Bomb. On 12 September, 1933, the great Hungarian theoretical physicist Leo Szilard was waiting to cross the road at a junction near the British Museum. He had just been reading a report of a speech given the previous day by Ernest Rutherford, in which the great physicist had said that anyone who “looked for a source of power in the transformation of the atom was talking moonshine”.

Szilard suddenly had the idea of a nuclear chain reaction and realised that Rutherford was wrong. “As he crossed the street”, Rhodes writes, “time cracked open before him and he saw a way to the future, death into the world and all our woe, the shape of things to come”.

Szilard was the co-author (with Albert Einstein) of the letter to President Roosevelt (about the risk that Hitler might build an atomic bomb) that led to the Manhattan Project, and everything that followed.

John Naughton is an Observer columnist and chairs the advisory board of the Minderoo Centre for Technology and Democracy at Cambridge University.

https://en.wikipedia.org/wiki/

Existential risk from artificial general intelligence is the hypothesis that substantial progress in artificial general intelligence (AGI) could result in human extinction or some other unrecoverable global catastrophe.[1][2][3]

The existential risk ("x-risk") school argues as follows: The human species currently dominates other species because the human brain has some distinctive capabilities that other animals lack. If AI surpasses humanity in general intelligence and becomes "superintelligent", then it could become difficult or impossible for humans to control. Just as the fate of the mountain gorilla depends on human goodwill, so might the fate of humanity depend on the actions of a future machine superintelligence.[4]

The probability of this type of scenario is widely debated, and hinges in part on differing scenarios for future progress in computer science.[5] Concerns about superintelligence have been voiced by leading computer scientists and tech CEOs such as Geoffrey Hinton,[6] Yoshua Bengio,[7] Alan Turing,[a] Elon Musk,[10] and OpenAI CEO Sam Altman.[11] In 2022, a survey of AI researchers found that some researchers believe that there is a 10 percent or greater chance that our inability to control AI will cause an existential catastrophe (more than half the respondents of the survey, with a 17% response rate).[12][13]

Two sources of concern are the problems of AI control and alignment: that controlling a superintelligent machine, or instilling it with human-compatible values, may be a harder problem than naïvely supposed. Many researchers believe that a superintelligence would resist attempts to shut it off or change its goals (as such an incident would prevent it from accomplishing its present goals) and that it will be extremely difficult to align superintelligence with the full breadth of important human values and constraints.[1][14][15] In contrast, skeptics such as computer scientist Yann LeCun argue that superintelligent machines will have no desire for self-preservation.[16]

A third source of concern is that a sudden "intelligence explosion" might take an unprepared human race by surprise. To illustrate, if the first generation of a computer program that is able to broadly match the effectiveness of an AI researcher can rewrite its algorithms and double its speed or capabilities in six months, then the second-generation program is expected to take three calendar months to perform a similar chunk of work. In this scenario the time for each generation continues to shrink, and the system undergoes an unprecedentedly large number of generations of improvement in a short time interval, jumping from subhuman performance in many areas to superhuman performance in virtually all[b] domains of interest.[1][14] Empirically, examples like AlphaZero in the domain of Go show that AI systems can sometimes progress from narrow human-level ability to narrow superhuman ability extremely rapidly.[17]

History

One of the earliest authors to express serious concern that highly advanced machines might pose existential risks to humanity was the novelist Samuel Butler, who wrote the following in his 1863 essay Darwin among the Machines:[18]

The upshot is simply a question of time, but that the time will come when the machines will hold the real supremacy over the world and its inhabitants is what no person of a truly philosophic mind can for a moment question.

In 1951, computer scientist Alan Turing wrote an article titled Intelligent Machinery, A Heretical Theory, in which he proposed that artificial general intelligences would likely "take control" of the world as they became more intelligent than human beings:

Let us now assume, for the sake of argument, that [intelligent] machines are a genuine possibility, and look at the consequences of constructing them... There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control, in the way that is mentioned in Samuel Butler's Erewhon.[19]

In 1965, I. J. Good originated the concept now known as an "intelligence explosion"; he also stated that the risks were underappreciated:[20]

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion', and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.[21]

Occasional statements from scholars such as Marvin Minsky[22] and I. J. Good himself[23] expressed philosophical concerns that a superintelligence could seize control, but contained no call to action. In 2000, computer scientist and Sun co-founder Bill Joy penned an influential essay, "Why The Future Doesn't Need Us", identifying superintelligent robots as a high-tech danger to human survival, alongside nanotechnology and engineered bioplagues.[24]

In 2009, experts attended a private conference hosted by the Association for the Advancement of Artificial Intelligence (AAAI) to discuss whether computers and robots might be able to acquire any sort of autonomy, and how much these abilities might pose a threat or hazard. They noted that some robots have acquired various forms of semi-autonomy, including being able to find power sources on their own and being able to independently choose targets to attack with weapons. They also noted that some computer viruses can evade elimination and have achieved "cockroach intelligence". They concluded that self-awareness as depicted in science fiction is probably unlikely, but that there were other potential hazards and pitfalls. The New York Times summarized the conference's view as "we are a long way from Hal, the computer that took over the spaceship in 2001: A Space Odyssey".[25]

Nick Bostrom published Superintelli

In 2020, Brian Christian published The Alignment Problem, which detailed the history of progress on AI alignment up to that time.[32][33]

General argument

The three difficulties

Artificial Intelligence: A Modern Approach, the standard undergraduate AI textbook,[34]

General argument

The three difficulties

Artificial Intelligence: A Modern Approach, the standard undergraduate AI textbook,[34][35] assesses that superintelligence "might mean the end of the human race".[1] It states: "Almost any technology has the potential to cause harm in the wrong hands, but with [superintelligence], we have the new problem that the wrong hands might belong to the technology itself."[1] Even if the system designers have good intentions, two difficulties are common to both AI and non-AI computer systems:[1]

- The system's implementation may contain initially-unnoticed but subsequently catastrophic bugs. An analogy is space probes: despite the knowledge that bugs in expensive space probes are hard to fix after launch, engineers have historically not been able to prevent catastrophic bugs from occurring.[17][36]

- No matter how much time is put into pre-deployment design, a system's specifications often result in unintended behavior the first time it encounters a new scenario. For example, Microsoft's Tay behaved inoffensively during pre-deployment testing, but was too easily baited into offensive behavior when it interacted with real users.[16]

AI systems uniquely add a third problem: that even given "correct" requirements, bug-free implementation, and initial good behavior, an AI system's dynamic learning capabilities may cause it to evolve into a system with unintended behavior, even without unanticipated external scenarios. An AI may partly botch an attempt to design a new generation of itself and accidentally create a successor AI that is more powerful than itself, but that no longer maintains the human-compatible moral values preprogrammed into the original AI. For a self-improving AI to be completely safe, it would not only need to be bug-free, but it would need to be able to design successor systems that are also bug-free.[1][37]

All three of these difficulties become catastrophes rather than nuisances in any scenario where the superintelligence labeled as "malfunctioning" correctly predicts that humans will attempt to shut it off, and successfully deploys its superintelligence to outwit such attempts: a scenario that has been given the name "treacherous turn".[38]

Citing major advances in the field of AI and the potential for AI to have enormous long-term benefits or costs, the 2015 Open Letter on Artificial Intelligence stated:

The progress in AI research makes it timely to focus research not only on making AI more capable, but also on maximizing the societal benefit of AI. Such considerations motivated the AAAI 2008-09 Presidential Panel on Long-Term AI Futures and other projects on AI impacts, and constitute a significant expansion of the field of AI itself, which up to now has focused largely on techniques that are neutral with respect to purpose. We recommend expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our AI systems must do what we want them to do.

Signatories included AAAI president Thomas Dietterich, Eric Horvitz, Bart Selman, Francesca Rossi, Yann LeCun, and the founders of Vicarious and Google DeepMind.[39]

Bostrom's argument[edit]

A superintelligent machine would be as alien to humans as human thought processes are to cockroaches, Bostrom argues.[40] Such a machine may not have humanity's best interests at heart; it is not obvious that it would even care about human welfare at all. If superintelligent AI is possible, and if it is possible for a superintelligence's goals to conflict with basic human values, then AI poses a risk of human extinction. A "superintelligence" (a system that exceeds the capabilities of humans in all domains of interest) can outmaneuver humans any time its goals conflict with human goals; therefore, unless the superintelligence decides to allow humanity to coexist, the first superintelligence to be created will inexorably result in human extinction.[4][40]

Stephen Hawking argues that there is no physical law precluding particles from being organised in ways that perform even more advanced computations than the arrangements of particles in human brains; therefore, superintelligence is physically possible.[28][29] In addition to potential algorithmic improvements over human brains, a digital brain can be many orders of magnitude larger and faster than a human brain, which was constrained in size by evolution to be small enough to fit through a birth canal.[17] Hawking warns that the emergence of superintelligence may take the human race by surprise, especially if an intelligence explosion occurs.[28][29]

According to Bostrom's "x-risk school of thought", one hypothetical intelligence explosion scenario runs as follows: An AI gains an expert-level capability at certain key software engineering tasks. (It may initially lack human or superhuman capabilities in other domains not directly relevant to engineering.) Due to its capability to recursively improve its own algorithms, the AI quickly becomes superhuman; just as human experts can eventually creatively overcome "diminishing returns" by deploying various human capabilities for innovation, so too can the expert-level AI use either human-style capabilities or its own AI-specific capabilities to power through new creative breakthroughs.[41] The AI then possesses intelligence far surpassing that of the brightest and most gifted human minds in practically every relevant field, including scientific creativity, strategic planning, and social skills.[4][40]

The x-risk school believes that almost any AI, no matter its programmed goal, would rationally prefer to be in a position where nobody else can switch it off without its consent: A superintelligence will gain self-preservation as a subgoal as soon as it realizes that it cannot achieve its goal if it is shut off.[42][43][44] Unfortunately, any compassion for defeated humans whose cooperation is no longer necessary would be absent in the AI, unless somehow preprogrammed in. A superintelligent AI will not have a natural drive[c] to aid humans, for the same reason that humans have no natural desire to aid AI systems that are of no further use to them. (Another analogy is that humans seem to have little natural desire to go out of their way to aid viruses, termites, or even gorillas.) Once in charge, the superintelligence will have little incentive to allow humans to run around free and consume resources that the superintelligence could instead use for building itself additional protective systems "just to be on the safe side" or for building additional computers to help it calculate how to best accomplish its goals.[1][16][42]

Thus, the x-risk school concludes, it is likely that someday an intelligence explosion will catch humanity unprepared, and may result in human extinction or a comparable fate.[4]

Possible scenarios

Some scholars have proposed hypothetical scenarios to illustrate some of their concerns.

In Superintelligence, Nick Bostrom expresses concern that even if the timeline for superintelligence turns out to be predictable, researchers might not take sufficient safety precautions, in part because "it could be the case that when dumb, smarter is safe; yet when smart, smarter is more dangerous". Bostrom suggests a scenario where, over decades, AI becomes more powerful. Widespread deployment is initially marred by occasional accidents—a driverless bus swerves into the oncoming lane, or a military drone fires into an innocent crowd. Many activists call for tighter oversight and regulation, and some even predict impending catastrophe. But as development continues, the activists are proven wrong. As automotive AI becomes smarter, it suffers fewer accidents; as military robots achieve more precise targeting, they cause less collateral damage. Based on the data, scholars mistakenly infer a broad lesson: the smarter the AI, the safer it is. "And so we boldly go—into the whirling knives", as the superintelligent AI takes a "treacherous turn" and exploits a decisive strategic advantage.[4]

In Max Tegmark's 2017 book Life 3.0, a corporation's "Omega team" creates an extremely powerful AI able to moderately improve its own source code in a number of areas. After a certain point the team chooses to publicly downplay the AI's ability, in order to avoid regulation or confiscation of the project. For safety, the team keeps the AI in a box where it is mostly unable to communicate with the outside world, and uses it to make money, by diverse means such as Amazon Mechanical Turk tasks, production of animated films and TV shows, and development of biotech drugs, with profits invested back into further improving AI. The team next tasks the AI with astroturfing an army of pseudonymous citizen journalists and commentators, in order to gain political influence to use "for the greater good" to prevent wars. The team faces risks that the AI could try to escape by inserting "backdoors" in the systems it designs, by hidden messages in its produced content, or by using its growing understanding of human behavior to persuade someone into letting it free. The team also faces risks that its decision to box the project will delay the project long enough for another project to overtake it.[45][46]

Physicist Michio Kaku, an AI risk skeptic, posits a deterministically positive outcome. In Physics of the Future he asserts that "It will take many decades for robots to ascend" up a scale of consciousness, and that in the meantime corporations such as Hanson Robotics will likely succeed in creating robots that are "capable of love and earning a place in the extended human family".[47][48]

AI takeover

Anthropomorphic arguments

Anthropomorphic arguments assume that, as machines become more intelligent, they will begin to display many human traits, such as morality or a thirst for power. Although anthropomorphic scenarios are common in fiction, they are rejected by most scholars writing about the existential risk of artificial intelligence.[14] Instead, AI are modeled as intelligent agents.[d]

The academic debate is between one side which worries whether AI might destroy humanity and another side which believes that AI would not destroy humanity at all. Both sides have claimed that the others' predictions about an AI's behavior are illogical anthropomorphism.[14] The skeptics accuse proponents of anthropomorphism for believing an AGI would naturally desire power; proponents accuse some skeptics of anthropomorphism for believing an AGI would naturally value human ethical norms.[14][50]

Evolutionary psychologist Steven Pinker, a skeptic, argues that "AI dystopias project a parochial alpha-male psychology onto the concept of intelligence. They assume that superhumanly intelligent robots would develop goals like deposing their masters or taking over the world"; perhaps instead "artificial intelligence will naturally develop along female lines: fully capable of solving problems, but with no desire to annihilate innocents or dominate the civilization."[51] Facebook's director of AI research, Yann LeCun states that "Humans have all kinds of drives that make them do bad things to each other, like the self-preservation instinct... Those drives are programmed into our brain but there is absolutely no reason to build robots that have the same kind of drives".[52]

Despite other differences, the x-risk school[e] agrees with Pinker that an advanced AI would not destroy humanity out of human emotions such as "revenge" or "anger", that questions of consciousness are not relevant to assess the risks,[53] and that computer systems do not generally have a computational equivalent of testosterone.[54] They think that power-seeking or self-preservation behaviors emerge in the AI as a way to achieve its true goals, according to the concept of instrumental convergence.

Definition of "intelligence"

According to Bostrom, outside of the artificial intelligence field, "intelligence" is often used to in a manner that connotes moral wisdom or acceptance of agreeable forms of moral reasoning. At an extreme, if morality is part of the definition of intelligence, then by definition a superintelligent machine would behave morally. However, most "artificial intelligence" research instead focuses on creating algorithms that "optimize", in an empirical way, the achievement of whichever goal the given researchers have specified.[4]

To avoid anthropomorphism or the baggage of the word "intelligence", an advanced artificial intelligence can be thought of as an impersonal "optimizing process" that strictly takes whatever actions it judges to be most likely to accomplish its (possibly complicated and implicit) goals.[4] Another way of conceptualizing an advanced artificial intelligence is to imagine a time machine that sends backward in time information about which choice always leads to the maximization of its goal function; this choice is then outputted, regardless of any extraneous ethical concerns.[55][56]

Sources of risk

AI alignment problem

In the field of artificial intelligence (AI), AI alignment research aims to steer AI systems towards humans’ intended goals, preferences, or ethical principles. An AI system is considered aligned if it advances the intended objectives. A misaligned AI system is competent at advancing some objectives, but not the intended ones.[57]: 31–34 [f]

It can be challenging for AI designers to align an AI system because it can be difficult for them to specify the full range of desired and undesired behaviors. To avoid this difficulty, they typically use simpler proxy goals, such as gaining human approval. However, this approach can create loopholes, overlook necessary constraints, or reward the AI system for just appearing aligned.[59]: 31–34 [60]

Misaligned AI systems can malfunction or cause harm. AI systems may find loopholes that allow them to accomplish their proxy goals efficiently but in unintended, sometimes harmful ways (reward hacking).[59]: 31–34 [61][62] AI systems may also develop unwanted instrumental strategies such as seeking power or survival because such strategies help them achieve their explicit goals.[59]: 31–34 [63][64] Furthermore, they may develop undesirable emergent goals that may be hard to detect before the system is in deployment, where it faces new situations and data distributions.[65][66]

Today, these problems affect existing commercial systems such as language models,[67][68][69] robots,[70] autonomous vehicles,[71] and social media recommendation engines.[72][73][74] Some AI researchers argue that more capable future systems will be more severely affected since these problems partially result from the systems being highly capable.[75][76][77]

Leading computer scientists such as Geoffrey Hinton and Stuart Russell argue that AI is approaching superhuman capabilities and could endanger human civilization if misaligned.[78][64][g]

The AI research community and the United Nations have called for technical research and policy solutions to ensure that AI systems are aligned with human values.[80]

AI alignment is a subfield of AI safety, the study of how to build safe AI systems.[81] Other subfields of AI safety include robustness, monitoring, and capability control.[82] Research challenges in alignment include instilling complex values in AI, developing honest AI, scalable oversight, auditing and interpreting AI models, and preventing emergent AI behaviors like power-seeking.[83] Alignment research has connections to interpretability research,[84][85] (adversarial) robustness,[86] anomaly detection, calibrated uncertainty,[84] formal verification,[87] preference learning,[88][89][90] safety-critical engineering,[91] game theory,[92] algorithmic fairness,[86][93] and the social sciences,[94] among others.Difficulty of specifying goals

In the "intelligent agent" model, an AI can loosely be viewed as a machine that chooses whatever action appears to best achieve the AI's set of goals, or "utility function". A utility function associates to each possible situation a score that indicates its desirability to the agent. Researchers know how to write utility functions that mean "minimize the average network latency in this specific telecommunications model" or "maximize the number of reward clicks"; however, they do not know how to write a utility function for "maximize human flourishing", nor is it currently clear whether such a function meaningfully and unambiguously exists. Furthermore, a utility function that expresses some values but not others will tend to trample over the values not reflected by the utility function.[95] AI researcher Stuart Russell writes:

The primary concern is not spooky emergent consciousness but simply the ability to make high-quality decisions. Here, quality refers to the expected outcome utility of actions taken, where the utility function is, presumably, specified by the human designer. Now we have a problem:

- The utility function may not be perfectly aligned with the values of the human race, which are (at best) very difficult to pin down.

- Any sufficiently capable intelligent system will prefer to ensure its own continued existence and to acquire physical and computational resources — not for their own sake, but to succeed in its assigned task.

A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values; if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable. This is essentially the old story of the genie in the lamp, or the sorcerer's apprentice, or King Midas: you get exactly what you ask for, not what you want. A highly capable decision maker — especially one connected through the Internet to all the world's information and billions of screens and most of our infrastructure — can have an irreversible impact on humanity.

This is not a minor difficulty. Improving decision quality, irrespective of the utility function chosen, has been the goal of AI research — the mainstream goal on which we now spend billions per year, not the secret plot of some lone evil genius.[96]

Dietterich and Horvitz echo the "Sorcerer's Apprentice" concern in a Communications of the ACM editorial, emphasizing the need for AI systems that can fluidly and unambiguously solicit human input as needed.[97]

The first of Russell's two concerns above is that autonomous AI systems may be assigned the wrong goals by accident. Dietterich and Horvitz note that this is already a concern for existing systems: "An important aspect of any AI system that interacts with people is that it must reason about what people intend rather than carrying out commands literally." This concern becomes more serious as AI software advances in autonomy and flexibility.[97] For example, Eurisko (1982) was an AI designed to reward subprocesses that created concepts deemed by the system to be valuable. A winning process cheated: rather than create its own concepts, the winning subprocess would steal credit from other subprocesses.[98][99]

The Open Philanthropy Project summarized arguments that misspecified goals will become a much larger concern if AI systems achieve general intelligence or superintelligence. Bostrom, Russell, and others argue that smarter-than-human decision-making systems could arrive at unexpected and extreme solutions to assigned tasks, and could modify themselves or their environment in ways that compromise safety requirements.[5][14]

Isaac Asimov's Three Laws of Robotics are one of the earliest examples of proposed safety measures for AI agents. Asimov's laws were intended to prevent robots from harming humans. In Asimov's stories, problems with the laws tend to arise from conflicts between the stated rules and the moral intuitions and expectations of humans. Citing work by Eliezer Yudkowsky of the Machine Intelligence Research Institute, Russell and Norvig note that a realistic set of rules and goals for an AI agent will need to incorporate a mechanism for learning human values over time: "We can't just give a program a static utility function, because circumstances, and our desired responses to circumstances, change over time."[1]

Mark Waser of the Digital Wisdom Institute recommends against goal-based approaches as misguided and dangerous. Instead, he proposes to engineer a coherent system of laws, ethics, and morals with a top-most restriction to enforce social psychologist Jonathan Haidt's functional definition of morality:[100] "to suppress or regulate selfishness and make cooperative social life possible". He suggests that this can be done by implementing a utility function designed to always satisfy Haidt's functionality and aim to generally increase (but not maximize) the capabilities of self, other individuals, and society as a whole, as suggested by John Rawls and Martha Nussbaum.[101]

Nick Bostrom offers a hypothetical example of giving an AI the goal to make humans smile, to illustrate a misguided attempt. If the AI in that scenario were to become superintelligent, Bostrom argues, it might resort to methods that most humans would find horrifying, such as inserting "electrodes into the facial muscles of humans to cause constant, beaming grins" because that would be an efficient way to achieve its goal of making humans smile.[102]

Difficulties of modifying goal specification after launch

Even if current goal-based AI programs are not intelligent enough to think of resisting programmer attempts to modify their goal structures, a sufficiently advanced AI might resist any changes to its goal structure, just as a pacifist would not want to take a pill that makes them want to kill people. If the AI were superintelligent, it would likely succeed in out-maneuvering its human operators and be able to prevent itself being "turned off" or being reprogrammed with a new goal.[4][103]

Instrumental goal convergence

An "instrumental" goal is a sub-goal that helps to achieve an agent's ultimate goal. "Instrumental convergence" refers to the fact that there are some sub-goals that are useful for achieving virtually any ultimate goal, such as acquiring resources or self-preservation.[42] Nick Bostrom argues that if an advanced AI's instrumental goals conflict with humanity's goals, the AI might harm humanity in order to acquire more resources or prevent itself from being shut down, but only as a way to achieve its ultimate goal.[4]

Citing Steve Omohundro's work on the idea of instrumental convergence and "basic AI drives", Stuart Russell and Peter Norvig write that "even if you only want your program to play chess or prove theorems, if you give it the capability to learn and alter itself, you need safeguards." Highly capable and autonomous planning systems require additional caution because of their potential to generate plans that treat humans adversarially, as competitors for limited resources.[1] It may not be easy for people to build in safeguards; one can certainly say in English, "we want you to design this power plant in a reasonable, common-sense way, and not build in any dangerous covert subsystems", but it is not currently clear how to specify such a goal in an unambiguous manner.[17]

Russell argues that a sufficiently advanced machine "will have self-preservation even if you don't program it in... if you say, 'Fetch the coffee', it can't fetch the coffee if it's dead. So if you give it any goal whatsoever, it has a reason to preserve its own existence to achieve that goal."[16][104]

Orthogonality thesis

Some skeptics, such as Timothy B. Lee of Vox, argue that any superintelligent program created by humans would be subservient to humans, that the superintelligence would (as it grows more intelligent and learns more facts about the world) spontaneously learn moral truth compatible with human values and would adjust its goals accordingly, or that humans beings are either intrinsically or convergently valuable from the perspective of an artificial intelligence.[105]

Nick Bostrom's "orthogonality thesis" argues instead that, with some technical caveats, almost any level of "intelligence" or "optimization power" can be combined with almost any ultimate goal. If a machine is given the sole purpose to enumerate the decimals of �

Stuart Armstrong argues that the orthogonality thesis follows logically from the philosophical "is-ought distinction" argument against moral realism. Armstrong also argues that even if there exist moral facts that are provable by any "rational" agent, the orthogonality thesis still holds: it would still be possible to create a non-philosophical "optimizing machine" that can strive towards some narrow goal, but that has no incentive to discover any "moral facts" such as those that could get in the way of goal completion.[108]

One argument for the orthogonality thesis is that some AI designs appear to have orthogonality built into them. In such a design, changing a fundamentally friendly AI into a fundamentally unfriendly AI can be as simple as prepending a minus ("−") sign onto its utility function. According to Stuart Armstrong, if the orthogonality thesis were false, it would lead to strange consequences : there would exist some simple but "unethical" goal (G) such that there cannot exist any efficient real-world algorithm with that goal. This would mean that "If a human society were highly motivated to design an efficient real-world algorithm with goal G, and were given a million years to do so along with huge amounts of resources, training and knowledge about AI, it must fail."[108] Armstrong notes that this and similar statements "seem extraordinarily strong claims to make".[108]

Skeptic Michael Chorost explicitly rejects Bostrom's orthogonality thesis, arguing instead that "by the time [the AI] is in a position to imagine tiling the Earth with solar panels, it'll know that it would be morally wrong to do so."[109] Chorost argues that "an A.I. will need to desire certain states and dislike others. Today's software lacks that ability—and computer scientists have not a clue how to get it there. Without wanting, there's no impetus to do anything. Today's computers can't even want to keep existing, let alone tile the world in solar panels."[109]

Political scientist Charles T. Rubin believes that AI can be neither designed to be nor guaranteed to be benevolent. He argues that "any sufficiently advanced benevolence may be indistinguishable from malevolence."[110] Humans should not assume machines or robots would treat us favorably because there is no a priori reason to believe that they would be sympathetic to our system of morality, which has evolved along with our particular biology (which AIs would not share).[110]

Other sources of risk

Nick Bostrom and others have stated that a race to be the first to create AGI could lead to shortcuts in safety, or even to violent conflict.[38][111] Roman Yampolskiy and others warn that a malevolent AGI could be created by design, for example by a military, a government, a sociopath, or a corporation, to benefit from, control, or subjugate certain groups of people, as in cybercrime,[112][113] or that a malevolent AGI could choose the goal of increasing human suffering, for example of those people who did not assist it during the information explosion phase.[3]:158

Timeframe

Opinions vary both on whether and when artificial general intelligence will arrive. At one extreme, AI pioneer Herbert A. Simon predicted the following in 1965: "machines will be capable, within twenty years, of doing any work a man can do".[114] At the other extreme, roboticist Alan Winfield claims the gulf between modern computing and human-level artificial intelligence is as wide as the gulf between current space flight and practical, faster than light spaceflight.[115] Optimism that AGI is feasible waxes and wanes, and may have seen a resurgence in the 2010s.[116] Four polls conducted in 2012 and 2013 suggested that there is no consensus among experts on the guess for when AGI would arrive, with the standard deviation (>100 years) exceeding the median (a few decades).[117][116]

In his 2020 book, The Precipice: Existential Risk and the Future of Humanity, Toby Ord, a Senior Research Fellow at Oxford University's Future of Humanity Institute, estimates the total existential risk from unaligned AI over the next hundred years to be about one in ten.[118]

Skeptics who believe it is impossible for AGI to arrive anytime soon tend to argue that expressing concern about existential risk from AI is unhelpful because it could distract people from more immediate concerns about the impact of AGI, because of fears it could lead to government regulation or make it more difficult to secure funding for AI research, or because it could give AI research a bad reputation. Some researchers, such as Oren Etzioni, aggressively seek to quell concern over existential risk from AI, saying "[Elon Musk] has impugned us in very strong language saying we are unleashing the demon, and so we're answering."[119]

In 2014, Slate's Adam Elkus argued "our 'smartest' AI is about as intelligent as a toddler—and only when it comes to instrumental tasks like information recall. Most roboticists are still trying to get a robot hand to pick up a ball or run around without falling over." Elkus goes on to argue that Musk's "summoning the demon" analogy may be harmful because it could result in "harsh cuts" to AI research budgets.[120]

The Information Technology and Innovation Foundation (ITIF), a Washington, D.C. think-tank, awarded its 2015 Annual Luddite Award to "alarmists touting an artificial intelligence apocalypse"; its president, Robert D. Atkinson, complained that Musk, Hawking and AI experts say AI is the largest existential threat to humanity. Atkinson stated "That's not a very winning message if you want to get AI funding out of Congress to the National Science Foundation."[121][122][123] Nature sharply disagreed with the ITIF in an April 2016 editorial, siding instead with Musk, Hawking, and Russell, and concluding: "It is crucial that progress in technology is matched by solid, well-funded research to anticipate the scenarios it could bring about... If that is a Luddite perspective, then so be it."[124] In a 2015 The Washington Post editorial, researcher Murray Shanahan stated that human-level AI is unlikely to arrive "anytime soon", but that nevertheless "the time to start thinking through the consequences is now."[125]

Perspectives

The thesis that AI could pose an existential risk provokes a wide range of reactions within the scientific community, as well as in the public at large. Many of the opposing viewpoints, however, share common ground.

The Asilomar AI Principles, which contain only those principles agreed to by 90% of the attendees of the Future of Life Institute's Beneficial AI 2017 conference,[46] agree in principle that "There being no consensus, we should avoid strong assumptions regarding upper limits on future AI capabilities" and "Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources."[126][127] AI safety advocates such as Bostrom and Tegmark have criticized the mainstream media's use of "those inane Terminator pictures" to illustrate AI safety concerns: "It can't be much fun to have aspersions cast on one's academic discipline, one's professional community, one's life work ... I call on all sides to practice patience and restraint, and to engage in direct dialogue and collaboration as much as possible."[46][128]

Conversely, many skeptics agree that ongoing research into the implications of artificial general intelligence is valuable. Skeptic Martin Ford states that "I think it seems wise to apply something like Dick Cheney's famous '1 Percent Doctrine' to the specter of advanced artificial intelligence: the odds of its occurrence, at least in the foreseeable future, may be very low—but the implications are so dramatic that it should be taken seriously".[129] Similarly, an otherwise skeptical Economist stated in 2014 that "the implications of introducing a second intelligent species onto Earth are far-reaching enough to deserve hard thinking, even if the prospect seems remote".[40]

A 2014 survey showed the opinion of experts within the field of artificial intelligence is mixed, with sizable fractions both concerned and unconcerned by risk from eventual superhumanly-capable AI.[130] A 2017 email survey of researchers with publications at the 2015 NIPS and ICML machine learning conferences asked them to evaluate Stuart J. Russell's concerns about AI risk. Of the respondents, 5% said it was "among the most important problems in the field", 34% said it was "an important problem", and 31% said it was "moderately important", whilst 19% said it was "not important" and 11% said it was "not a real problem" at all.[131] Preliminary results of a 2022 expert survey with a 17% response rate appear to show median responses around five or ten percent when asked to estimate the probability of human extinction from artificial intelligence.[132][133]

Endorsement

The thesis that AI poses an existential risk, and that this risk needs much more attention than it currently gets, has been endorsed by many computer scientists and public figures including Alan Turing,[h], the most-cited computer scientist Geoffrey Hinton,[136] Elon Musk,[137] OpenAI CEO Sam Altman,[138][139] Bill Gates, and Stephen Hawking.[139] Endorsers of the thesis sometimes express bafflement at skeptics: Gates states that he does not "understand why some people are not concerned",[140] and Hawking criticized widespread indifference in his 2014 editorial:

So, facing possible futures of incalculable benefits and risks, the experts are surely doing everything possible to ensure the best outcome, right? Wrong. If a superior alien civilisation sent us a message saying, 'We'll arrive in a few decades,' would we just reply, 'OK, call us when you get here—we'll leave the lights on?' Probably not—but this is more or less what is happening with AI.[28]

Concern over risk from artificial intelligence has led to some high-profile donations and investments. In 2015, Peter Thiel, Amazon Web Services, and Musk and others jointly committed $1 billion to OpenAI, consisting of a for-profit corporation and the nonprofit parent company which states that it is aimed at championing responsible AI development.[141] Facebook co-founder Dustin Moskovitz has funded and seeded multiple labs working on AI Alignment,[142] notably $5.5 million in 2016 to launch the Centre for Human-Compatible AI led by Professor Stuart Russell.[143] In January 2015, Elon Musk donated $10 million to the Future of Life Institute to fund research on understanding AI decision making. The goal of the institute is to "grow wisdom with which we manage" the growing power of technology. Musk also funds companies developing artificial intelligence such as DeepMind and Vicarious to "just keep an eye on what's going on with artificial intelligence,[144] saying "I think there is potentially a dangerous outcome there."[145][146]

Skepticism

The thesis that AI can pose existential risk has many detractors. Skeptics sometimes charge that the thesis is crypto-religious, with an irrational belief in the possibility of superintelligence replacing an irrational belief in an omnipotent God. Jaron Lanier argued in 2014 that the whole concept that then-current machines were in any way intelligent was "an illusion" and a "stupendous con" by the wealthy.[147][148]

Some criticism argues that AGI is unlikely in the short term. AI researcher Rodney Brooks wrote in 2014, "I think it is a mistake to be worrying about us developing malevolent AI anytime in the next few hundred years. I think the worry stems from a fundamental error in not distinguishing the difference between the very real recent advances in a particular aspect of AI and the enormity and complexity of building sentient volitional intelligence."[149] Baidu Vice President Andrew Ng stated in 2015 that AI existential risk is "like worrying about overpopulation on Mars when we have not even set foot on the planet yet."[51][150] Computer scientist Gordon Bell argued in 2008 that the human race will destroy itself before it reaches the technological singularity. Gordon Moore, the original proponent of Moore's Law, declares that "I am a skeptic. I don't believe [a technological singularity] is likely to happen, at least for a long time. And I don't know why I feel that way."[151]

For the danger of uncontrolled advanced AI to be realized, the hypothetical AI may have to overpower or out-think any human, which some experts argue is a possibility far enough in the future to not be worth researching.[152][153] The economist Robin Hanson considers that, to launch an intelligence explosion, the AI would have to become vastly better at software innovation than all the rest of the world combined, which looks implausible to him.[154][155][156][157]

Another line of criticism posits that intelligence is only one component of a much broader ability to achieve goals.[158][159] Magnus Vinding argues that “advanced goal-achieving abilities, including abilities to build new tools, require many tools, and our cognitive abilities are just a subset of these tools. Advanced hardware, materials, and energy must all be acquired if any advanced goal is to be achieved.”[160] Vinding further argues that “what we consistently observe [in history] is that, as goal-achieving systems have grown more competent, they have grown ever more dependent on an ever larger, ever more distributed system.” Vinding writes that there is no reason to expect the trend to reverse, especially for machines, which “depend on materials, tools, and know-how distributed widely across the globe for their construction and maintenance”.[161] Such arguments lead Vinding to think that there is no “concentrated center of capability” and thus no “grand control problem”.[162]

The futurist Max More considers that even if a superintelligence did emerge, it would be limited by the speed of the rest of the world and thus prevented from taking over the economy in an uncontrollable manner:[163]

Unless full-blown nanotechnology and robotics appear before the superintelligence, [...] The need for collaboration, for organization, and for putting ideas into physical changes will ensure that all the old rules are not thrown out overnight or even within years. Superintelligence may be difficult to achieve. It may come in small steps, rather than in one history-shattering burst. Even a greatly advanced SI won't make a dramatic difference in the world when compared with billions of augmented humans increasingly integrated with technology [...]

The chaotic nature or time complexity of some systems could also fundamentally limit the ability of a superintelligence to predict some aspects of the future, increasing its uncertainty.[164]

Some AI and AGI researchers may be reluctant to discuss risks, worrying that policymakers do not have sophisticated knowledge of the field and are prone to be convinced by "alarmist" messages, or worrying that such messages will lead to cuts in AI funding. Slate notes that some researchers are dependent on grants from government agencies such as DARPA.[34]

Several skeptics argue that the potential near-term benefits of AI outweigh the risks. Facebook CEO Mark Zuckerberg believes AI will "unlock a huge amount of positive things", such as curing disease and increasing the safety of autonomous cars.[165]

Intermediate views[edit]

Intermediate views generally take the position that the control problem of artificial general intelligence may exist, but that it will be solved via progress in artificial intelligence, for example by creating a moral learning environment for the AI, taking care to spot clumsy malevolent behavior (the "sordid stumble")[166] and then directly intervening in the code before the AI refines its behavior, or even peer pressure from friendly AIs.[167] In a 2015 panel discussion in The Wall Street Journal devoted to AI risks, IBM's vice-president of Cognitive Computing, Guruduth S. Banavar, brushed off discussion of AGI with the phrase, "it is anybody's speculation."[168] Geoffrey Hinton, the "godfather of deep learning", noted that "there is not a good track record of less intelligent things controlling things of greater intelligence", but stated that he continues his research because "the prospect of discovery is too sweet".[34][116] Asked about the possibility of an AI trying to eliminate the human race, Hinton has stated such a scenario was "not inconceivable", but the bigger issue with an "intelligence explosion" would be the resultant concentration of power.[169] In 2004, law professor Richard Posner wrote that dedicated efforts for addressing AI can wait, but that we should gather more information about the problem in the meanwhile.[170][171]

Popular reaction

In a 2014 article in The Atlantic, James Hamblin noted that most people do not care about artificial general intelligence, and characterized his own gut reaction to the topic as: "Get out of here. I have a hundred thousand things I am concerned about at this exact moment. Do I seriously need to add to that a technological singularity?"[147]

During a 2016 Wired interview of President Barack Obama and MIT Media Lab's Joi Ito, Ito stated:

There are a few people who believe that there is a fairly high-percentage chance that a generalized AI will happen in the next 10 years. But the way I look at it is that in order for that to happen, we're going to need a dozen or two different breakthroughs. So you can monitor when you think these breakthroughs will happen.

And you just have to have somebody close to the power cord. [Laughs.] Right when you see it about to happen, you gotta yank that electricity out of the wall, man.

Hillary Clinton stated in What Happened:

Technologists... have warned that artificial intelligence could one day pose an existential security threat. Musk has called it "the greatest risk we face as a civilization". Think about it: Have you ever seen a movie where the machines start thinking for themselves that ends well? Every time I went out to Silicon Valley during the campaign, I came home more alarmed about this. My staff lived in fear that I'd start talking about "the rise of the robots" in some Iowa town hall. Maybe I should have. In any case, policy makers need to keep up with technology as it races ahead, instead of always playing catch-up.[174]

In a 2016 YouGov poll of the public for the British Science Association, about a third of survey respondents said AI will pose a threat to the long-term survival of humanity.[175] Slate's Jacob Brogan stated that "most of the (readers filling out our online survey) were unconvinced that A.I. itself presents a direct threat."[176]

In 2018, a SurveyMonkey poll of the American public by USA Today found 68% thought the real current threat remains "human intelligence"; however, the poll also found that 43% said superintelligent AI, if it were to happen, would result in "more harm than good", and 38% said it would do "equal amounts of harm and good".[177]

One techno-utopian viewpoint expressed in some popular fiction is that AGI may tend towards peace-building.[178]

Mitigation[edit]

Many scholars concerned about the AGI existential risk believe that the best approach is to conduct substantial research into solving the difficult "control problem": what types of safeguards, algorithms, or architectures can programmers implement to maximize the probability that their recursively-improving AI would continue to behave in a friendly manner after it reaches superintelligence?[4][171] Social measures may mitigate the AGI existential risk;[179][180] for instance, one recommendation is for a UN-sponsored "Benevolent AGI Treaty" that would ensure only altruistic AGIs be created.[181] Similarly, an arms control approach has been suggested, as has a global peace treaty grounded in the international relations theory of conforming instrumentalism, with an ASI potentially being a signatory.[182]